The Minimax Problem¶

Recall the interpolation error formula:

We can’t control the first factor (it depends on ), but we can minimize the second by choosing nodes wisely.

The Minimax Problem: Choose to minimize

The Solution: Chebyshev Roots¶

For , the Chebyshev roots are:

These points cluster near the endpoints of the interval—exactly where Runge’s phenomenon causes problems with equally spaced nodes!

Chebyshev Polynomials¶

![The first six Chebyshev polynomials T_0(x) through T_5(x). Note that each T_k oscillates between -1 and +1 exactly k+1 times on [-1, 1].](/MATH551/build/cheb-1408e2ff6392297ce6cc79f1bfab0c53.png)

The first six Chebyshev polynomials through . Note that each oscillates between -1 and +1 exactly times on .

Definition via Complex Exponentials¶

The most illuminating definition connects Chebyshev polynomials to Fourier series and complex analysis.

This definition directly links Chebyshev series to Fourier series and Laurent series—three fundamental tools connected through the change of variables .

Why They’re Polynomials¶

From the definition, we verify the first few:

Recurrence Relation¶

The recurrence follows from the complex exponential definition:

which gives:

By induction, each is a polynomial of degree exactly .

Key Properties¶

Roots: has roots at the Chebyshev nodes

Extrema: oscillates between -1 and +1 exactly times on

Leading coefficient: The coefficient of in is (for )

Minimax property: is the monic polynomial of degree with smallest maximum on

Proof of Optimality¶

The proof uses a contradiction argument based on the alternation property.

Proof 1

Let be any monic polynomial of degree , and suppose

Let , which is monic and alternates between at points .

At each :

If , then

If , then

So alternates sign at least times, meaning it has at least roots.

But has degree at most (both are monic of degree ), so it can have at most roots. Contradiction!

Transformation to Arbitrary Intervals¶

The Chebyshev nodes are defined for . For an arbitrary interval , we transform:

This:

Scales the Chebyshev points by (the ratio of interval lengths)

Shifts the center from 0 to

The minimum value of the node polynomial on becomes .

Chebyshev vs. Equally Spaced: Runge’s Function¶

Consider on .

Chebyshev vs. equally spaced nodes: Convergence comparison for polynomial interpolation. Chebyshev nodes achieve exponential convergence for smooth functions, while equally spaced nodes fail for Runge’s function.

| Nodes | Error behavior as |

|---|---|

| Equally spaced | Error grows without bound |

| Chebyshev | Error decreases exponentially |

This is the power of optimal node placement!

When to Use Chebyshev Interpolation¶

Chebyshev interpolation is particularly valuable for:

Spectral methods for differential equations

Gaussian quadrature for numerical integration

Approximating smooth functions with high accuracy

However, for most practical data fitting, piecewise polynomials (splines) are often preferred because:

They’re simpler to work with

They don’t require special node placement

They naturally handle non-smooth data

Chebyshev Series¶

Beyond interpolation, Chebyshev polynomials provide a powerful series representation for functions, analogous to Taylor series but with superior convergence properties.

Lipschitz Continuity¶

Any function is Lipschitz (by the mean value theorem), but not every continuous function is Lipschitz.

The Chebyshev Series Theorem¶

The coefficients are given by the integral formula:

Connection to Fourier Series

Under the change of variables , we have and:

So the Chebyshev coefficients are precisely Fourier cosine coefficients of !

Why Chebyshev Over Taylor?¶

| Aspect | Taylor Series | Chebyshev Series |

|---|---|---|

| Center | Single point | Entire interval |

| Convergence | Disk of convergence | Whole interval (uniform) |

| Truncation | Best near | Near-best everywhere |

| Complexity | via Vandermonde | via DCT |

For functions on an interval, Chebyshev series are almost always preferable.

From Interpolation to Approximation¶

So far, we’ve treated interpolation as a data-fitting problem: given points , find a polynomial passing through them. But there’s a more powerful perspective.

Key insight: If we have a function , we can sample it at Chebyshev nodes to get data, then interpolate. The natural question becomes: how well does the interpolant approximate the original function?

This shift—from fitting given data to approximating a known function—is the foundation of spectral methods. The Chebyshev interpolant becomes a computational stand-in for : we differentiate, integrate, or solve equations using instead of .

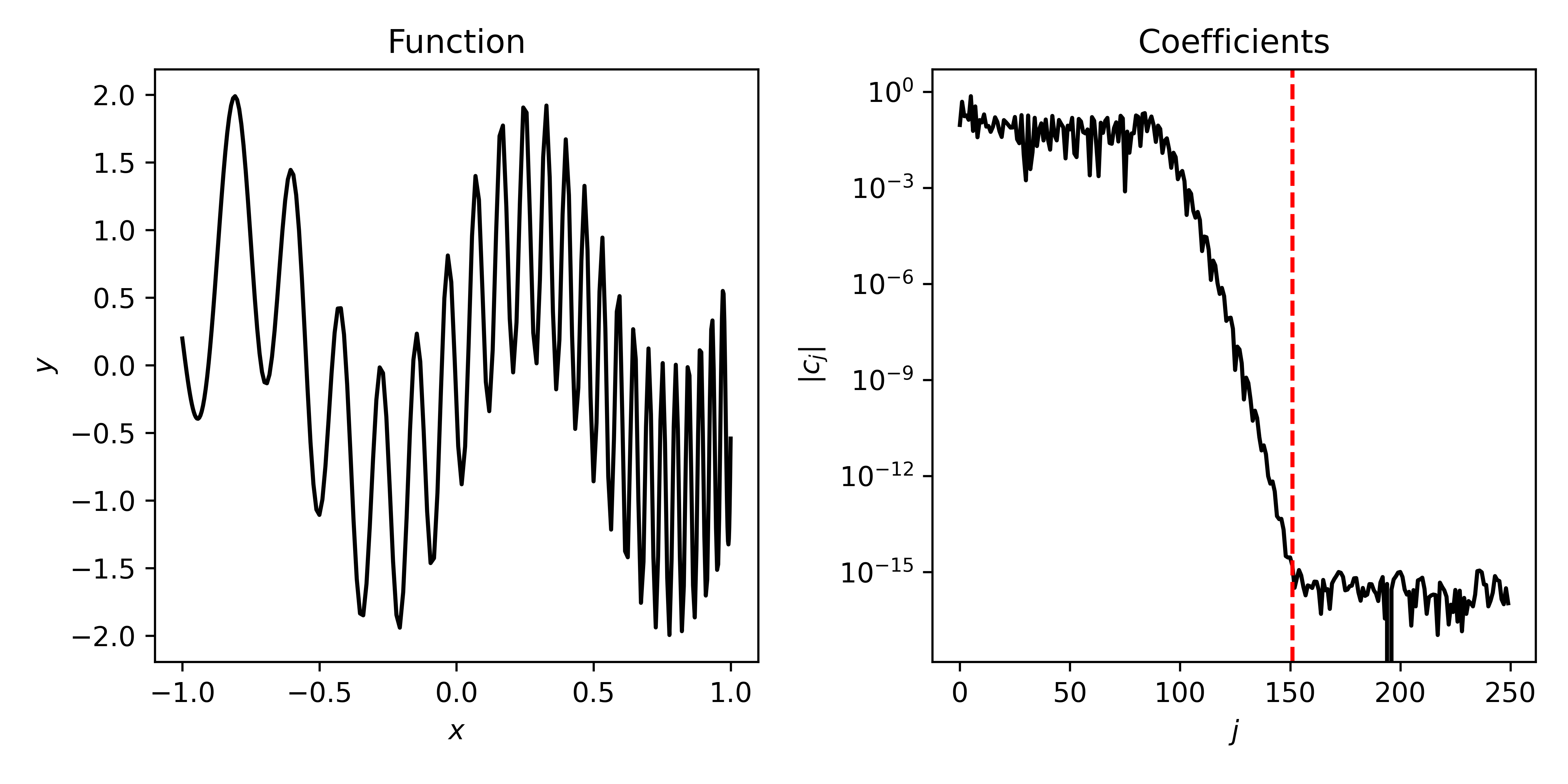

Convergence Rates: A Preview¶

The smoothness of determines the convergence rate:

| Smoothness | Coefficient Decay | Approximation Error |

|---|---|---|

| (algebraic) | ||

| analytic | (exponential) |

The jump from algebraic to exponential convergence when becomes analytic is dramatic—this is what makes spectral methods so powerful for smooth problems.

See the Spectral Accuracy chapter for the precise theorems, including:

The Bernstein ellipse and how complex singularities control convergence

Coefficient decay rates and their relationship to smoothness

Examples showing how to diagnose function smoothness from coefficients